Why This Project Exists

In the not-too-distant future, I plan to travel to a country where internet access can get... opinionated. The stuff I normally rely on (work tools, Google services, even basic apps) can suddenly become slow, unreliable, or straight-up blocked.

At first I did what everyone does: try a couple of popular VPN apps and hope for the best. And yeah… it worked sometimes. Other times it got throttled, randomly died, or felt like playing roulette with “will this connect today?”

So this project started as a very practical goal: I wanted something reliable, under my control, and easy to rebuild if (when) it gets targeted or breaks (because it always does at some point). But I also wanted it to be a clean portfolio piece, something that shows I can ship an end-to-end system with proper automation, security habits, and documentation, not just a script that “works on my laptop.”

In short: this wasn't about inventing a new VPN. It was about building a solid, repeatable setup that I can own, understand, and evolve.

Architecture at a Glance

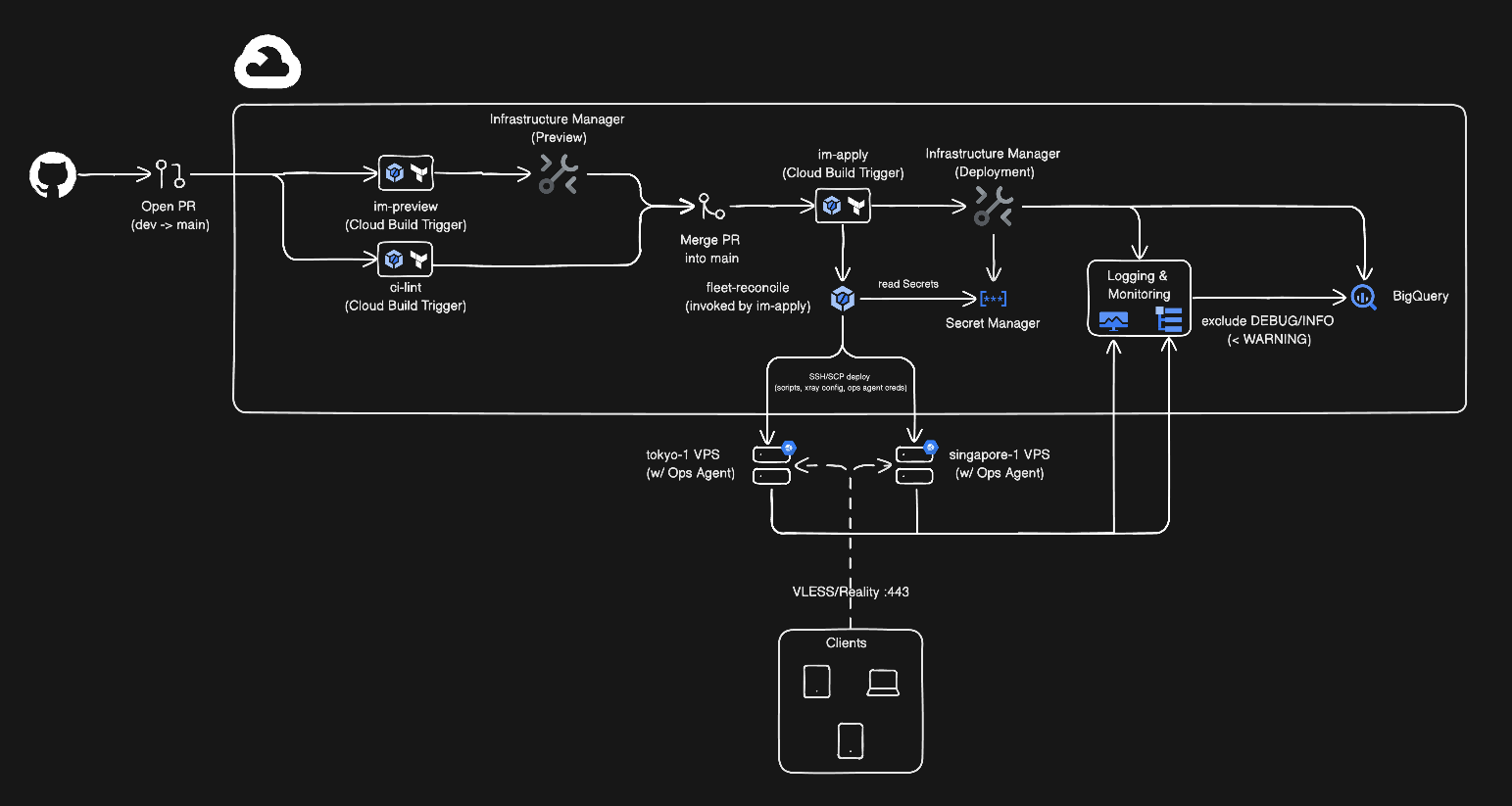

The whole stack lives in code:

• Terraform (GCP) builds the control plane: service accounts, Secret Manager, CI triggers, logging sinks, etc.

• Cloud Build acts like my “operator”: lint/scan, plan previews, apply changes, then trigger deployment.

• Fleet reconcile SSHes into each VPS and makes the servers converge to the desired config (idempotent: safe to run repeatedly).

• Reality (an Xray transport) handles the stealthy TLS handshake so the traffic looks like Chrome hitting a legit website.
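What “idempotent” means for the reconcile step can be sketched in a few lines of shell. This is an illustration under assumptions, not the repo's actual script: `ensure_file` is a hypothetical helper, and the config path in the usage comment is a guess. It replaces a file only when the desired content differs, so running it a second time changes nothing.

```shell
# Sketch only: ensure_file is a hypothetical helper, not taken from the repo.

# ensure_file DEST: read the desired content from stdin and install it as DEST
# only if DEST is missing or differs. Prints "changed" or "unchanged" so the
# caller can decide whether a service restart is needed.
ensure_file() {
  dest=$1
  tmp=$(mktemp) || return 1      # mktemp creates the file with mode 0600
  cat > "$tmp"                   # capture the desired content
  if [ -f "$dest" ] && cmp -s "$tmp" "$dest"; then
    rm -f "$tmp"                 # already converged: leave DEST untouched
    echo unchanged
  else
    mv "$tmp" "$dest"            # first run or drift: replace DEST
    echo changed
  fi
}

# Hypothetical usage on a VPS (render_config and the path are assumptions):
#   render_config | ensure_file /etc/xray/config.json
```

Because the function reports whether anything changed, a reconcile run can skip restarts on servers that are already in the desired state.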


Reality check: the chicken-and-egg problem

Before Terraform can “manage everything,” some things must exist first. You can't use automation to create the automation.

So I bootstrap a few pieces manually, then import them into Terraform state. (Maybe a few too many, to be honest 😅. Sometimes I prioritize speed and iteration over a perfect one-shot, and everything ends up in Terraform at the end of the day.) The manual pieces:

- create the GCP project

- create the Terraform executor service account (im-executor)

- set up the Cloud Build GitHub connection (2nd gen)

- assign a couple of IAM roles that the Terraform provider can't assign due to API limitations

- seed the first secret values (SSH keys, Xray creds, etc.)

Confession: I did more “click-first-then-import” than I'd recommend in a perfect world. When you're debugging IAM, the Console is faster than endless plan/apply cycles. Once it works, it gets imported and becomes boring again.

Infrastructure Intentions

My goal was to keep the pipeline boring-in-a-good-way: smallest possible privileges, clean hand-offs, and zero secret leaks into logs.

So instead of one mega-powerful CI identity, each pipeline stage gets its own service account:

| Trigger | Service Account | Scope |
|---|---|---|
| ci-lint | cb-ci-lint | Security scans only |
| im-preview | cb-im-preview | Terraform plans |
| im-apply | cb-im-apply | Apply changes |
| fleet-reconcile | cb-fleet-reconcile | Deploy to VPS |
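One way to picture the one-identity-per-stage split is a binding on a single secret rather than a project-wide role. The setup described here lives in Terraform; the sketch below only prints the equivalent gcloud command so the shape of such a binding is visible. The helper name, project ID, and secret name are hypothetical.

```shell
# Sketch only: prints a command, does not run it. The helper name and the
# values in the usage comment are hypothetical.

# grant_secret_access_cmd PROJECT SA_NAME SECRET
# Prints the gcloud command that lets one service account read one secret.
grant_secret_access_cmd() {
  project=$1
  sa_name=$2
  secret=$3
  printf 'gcloud secrets add-iam-policy-binding %s --project=%s --member=serviceAccount:%s@%s.iam.gserviceaccount.com --role=roles/secretmanager.secretAccessor\n' \
    "$secret" "$project" "$sa_name" "$project"
}

# Hypothetical usage: only the deploy stage may read the fleet SSH key.
#   grant_secret_access_cmd my-project cb-fleet-reconcile fleet-ssh-key
```

The point of the shape is that the role is attached to the secret itself, so an identity with no binding on a secret cannot read it.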

A few security choices I'm weirdly proud of:

- Secret-level IAM, not project-wide: each SA gets secretAccessor only on the exact secrets it needs.

- Impersonation boundaries: the Terraform executor has broad perms, but only the deployment triggers can impersonate it. Lint can't touch infra at all.

- No secrets in logs: secrets land in temp files with chmod 600, get cleaned up by traps, and get shredded on the VPS after use.

- SSH host key pinning: I explicitly don't do StrictHostKeyChecking=no. I scan host keys during setup, store them in Secret Manager, and fail deployments if server identity changes unexpectedly.

- Least-privileged Xray runtime: Xray runs as a dedicated xray user, configs are locked down, and it keeps only CAP_NET_BIND_SERVICE for port 443.

- Supply-chain sanity: verify checksums, pin versions, and avoid surprise provider updates.
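Two of these habits (private temp files cleaned up by a trap, and pinned SSH host keys) fit in one short sketch. It is an illustration under assumptions rather than the repo's deploy script: `with_secret_dir` and `ssh_pinned` are hypothetical helper names, and the address in the usage comment is a documentation placeholder.

```shell
# Sketch only: with_secret_dir and ssh_pinned are hypothetical helper names.

# Run a command with SECRET_DIR set to a private temp directory. The body runs
# in a subshell, so the EXIT trap removes the directory when the command
# finishes, whether it succeeds or fails.
with_secret_dir() (
  SECRET_DIR=$(mktemp -d) || exit 1   # mktemp -d creates the directory as 0700
  export SECRET_DIR
  trap 'rm -rf "$SECRET_DIR"' EXIT
  umask 077                           # files created from here on are 0600
  "$@"
  exit $?
)

# Connect only if the server presents the pinned host key.
# $1 = user@host, $2 = pinned known_hosts line(s), remaining args = remote command.
ssh_pinned() {
  target=$1
  pinned=$2
  shift 2
  printf '%s\n' "$pinned" > "$SECRET_DIR/known_hosts"
  ssh -o StrictHostKeyChecking=yes \
      -o UserKnownHostsFile="$SECRET_DIR/known_hosts" \
      "$target" "$@"
}

# Hypothetical usage (PINNED_LINE would come from the secret store):
#   with_secret_dir ssh_pinned deploy@203.0.113.10 "$PINNED_LINE" uname -a
```

With `StrictHostKeyChecking=yes` and a known_hosts file that contains only the pinned key, ssh refuses to connect when the server's key is unknown or has changed, which is what makes a deployment fail loudly instead of trusting a new identity.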

Every PR hits automated checks: Terraform formatting/validation, linting, security scanners, shell script linting, and secret scanning. If any check fails, the PR doesn't merge.

Protocol & VPS Choices

I skipped WireGuard, IKEv2, and OpenVPN because their handshakes are easier for deep packet inspection (DPI) to spot.

- WireGuard is fast, but DPI can spot its UDP handshake pattern.

- IKEv2/IPSec has well-known signatures.

- OpenVPN-over-TLS starts like HTTPS, but diverges enough for advanced DPI to catch it.

Reality takes a different approach: it doesn't wrap VPN traffic in TLS in a way that looks off. It impersonates a real TLS session:

- claims to connect to a legit domain via SNI

- mimics Chrome's TLS fingerprint (cipher suites, extensions, etc.)

- if someone probes the server without valid params, they get forwarded to the real decoy site

- VLESS adds no encryption layer of its own, so there is no extra overhead; TLS handles that

One practical detail is shortIds. Each VPS has its own shortIds, and I keep 2 to 3 per server so I can rotate clients if one ever gets flagged, without touching server config.

“If you guess my server IP without a valid shortId, you just land on the decoy site. The VPN never reveals itself.”
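Generating a small pool of shortIds per server can be sketched as below. This assumes the usual Reality convention that a shortId is a hex string of even length, up to 16 characters; the helper names are hypothetical and the pool size of 3 just mirrors the 2 to 3 mentioned above.

```shell
# Sketch only: helper names are hypothetical.

# Print one random shortId: 8 random bytes rendered as 16 lowercase hex chars.
new_short_id() {
  head -c 8 /dev/urandom | od -An -tx1 | tr -d ' \n'
  echo
}

# Print N shortIds, one per line (default 3).
new_short_ids() {
  n=${1:-3}
  i=0
  while [ "$i" -lt "$n" ]; do
    new_short_id
    i=$((i + 1))
  done
}

# Hypothetical usage: a pool of three shortIds for one VPS.
#   new_short_ids 3
```

Provisioning the whole pool on the server up front is what allows a client to switch to a spare shortId later without a server-side change.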

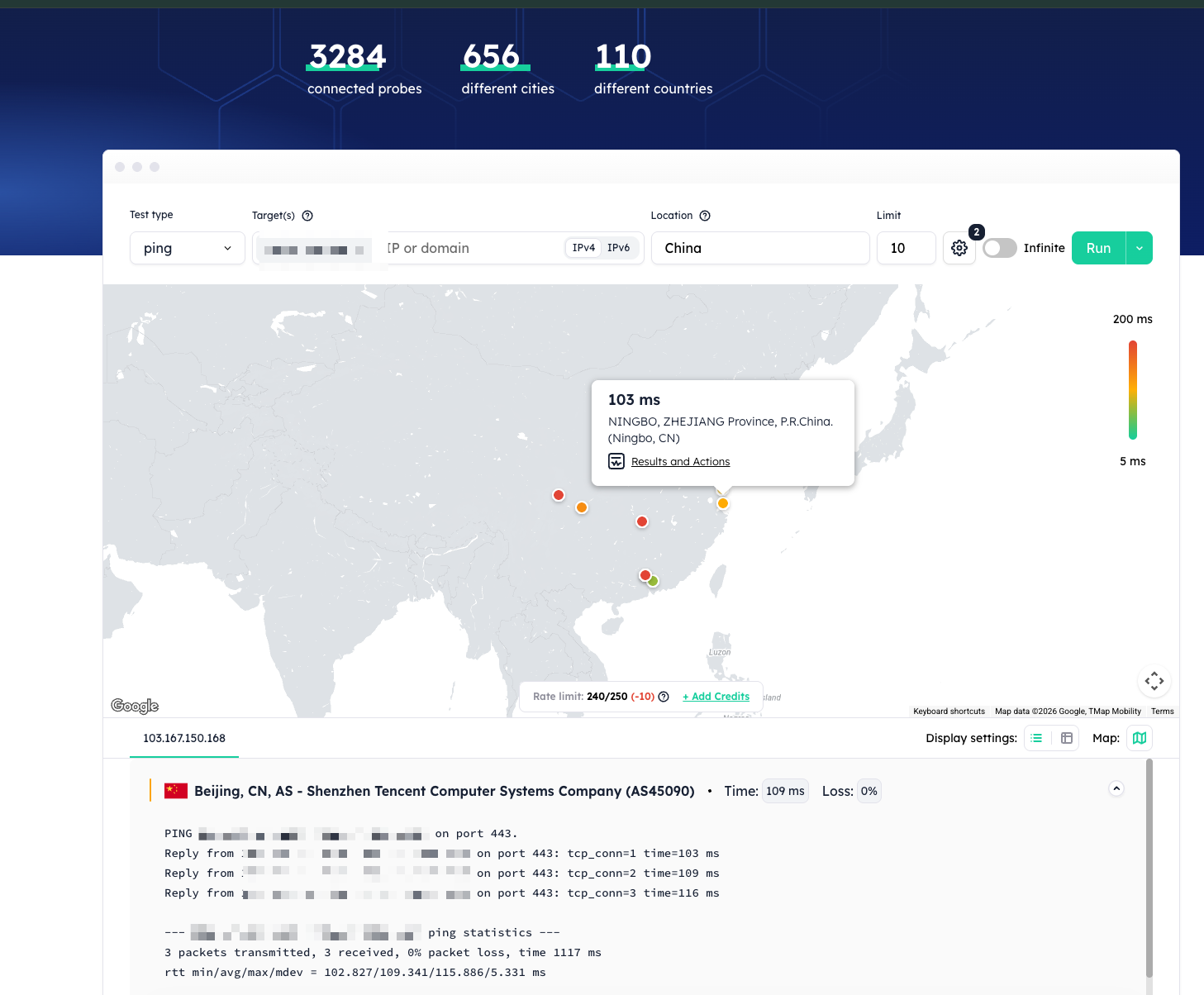

Provider choice matters too. Before renting anything, I test reachability from the destination country (I used globalping looking-glass checks) because reputation and routing can make or break the experience.

Client Setup Snapshots

Once Terraform applies and the fleet reconciler runs, each VPS writes the client connection URL to disk. I SSH in and grab it:

    cat /etc/xray/client_url.txt
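For orientation, VLESS share links for Reality commonly follow the shape below. This is a sketch of the widely used share-link convention, not the exact string the repo's scripts write: the helper name and every value in the usage comment are hypothetical, and a real link may carry extra parameters (a flow setting, for example).

```shell
# Sketch only: vless_reality_url is a hypothetical helper that shows the
# anatomy of a share link; all values passed to it are placeholders.

# vless_reality_url UUID HOST SNI PUBLIC_KEY SHORT_ID LABEL
vless_reality_url() {
  uuid=$1         # client identity
  host=$2         # VPS address
  sni=$3          # decoy domain presented in the TLS handshake
  public_key=$4   # server's Reality public key
  short_id=$5     # one of the server's shortIds
  label=$6        # display name shown in the client app
  printf 'vless://%s@%s:443?encryption=none&security=reality&sni=%s&fp=chrome&pbk=%s&sid=%s&type=tcp#%s\n' \
    "$uuid" "$host" "$sni" "$public_key" "$short_id" "$label"
}

# Hypothetical usage:
#   vless_reality_url 11111111-2222-3333-4444-555555555555 203.0.113.10 \
#     www.example.com PUBKEY 0123456789abcdef sg-1
```

Reading the link this way makes rotation easier to reason about: swapping the shortId changes only the `sid` value, while regenerating keys changes `pbk`.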

Then I paste it into my clients:

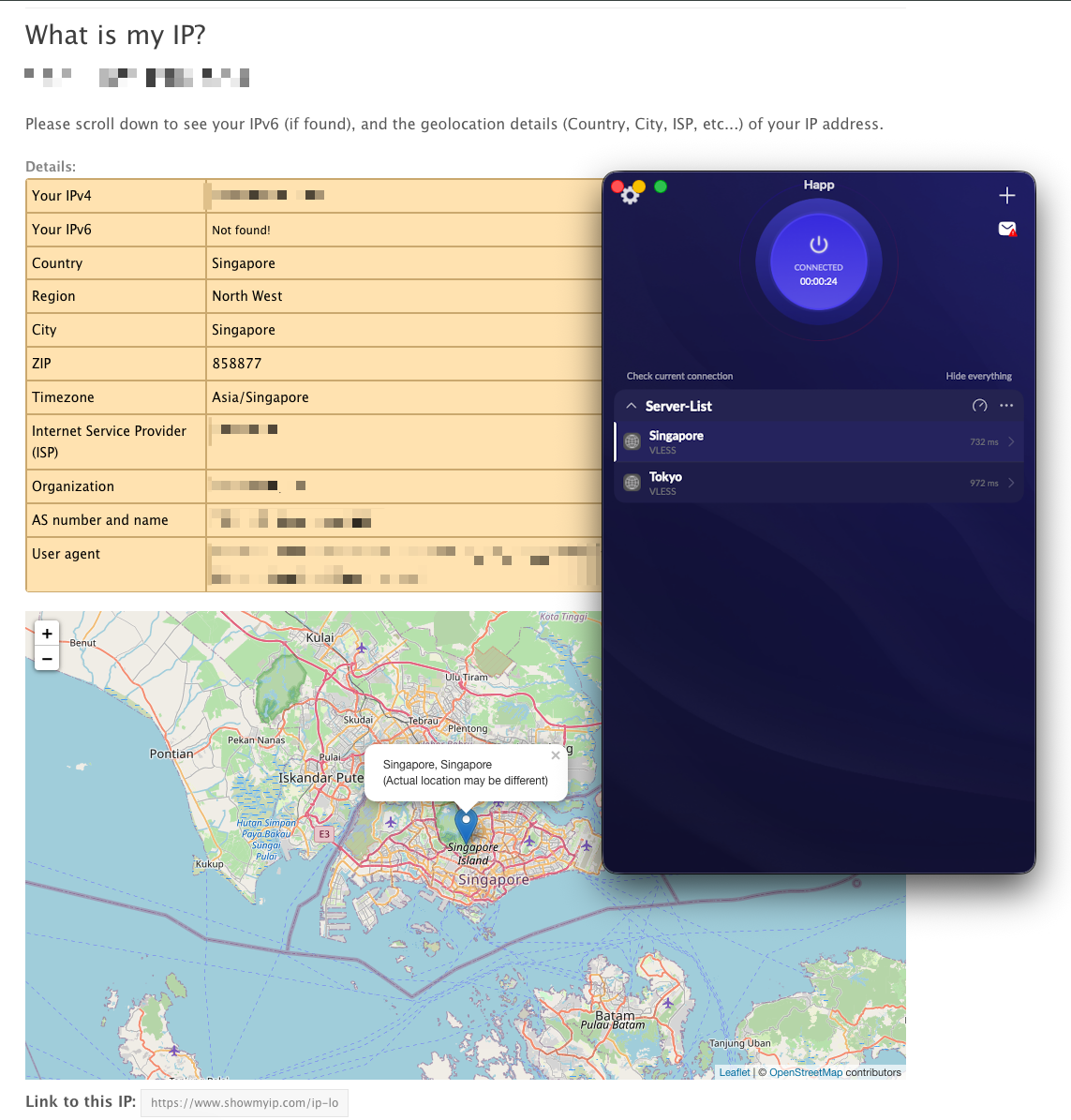

- macOS: Happ (free)

- iOS: Shadowrocket (paid, but reliable)

My sanity check is simple: connect the client, then verify the exit IP:

    curl -s ifconfig.me

This should return the VPS IP, not my local one.

Seeing a Singapore IP in the browser while the Happ client is active confirms that traffic is flowing through the VPS.
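The same check can be scripted so it fails loudly when the exit IP is wrong. A minimal sketch, assuming the VPS address is known up front: `check_exit_ip` is a hypothetical helper, and the optional second argument exists only so the comparison can be exercised without a network call.

```shell
# Sketch only: check_exit_ip is a hypothetical helper.

# check_exit_ip EXPECTED [ACTUAL]
# Compares the public exit IP with the expected VPS IP. If ACTUAL is omitted,
# it is looked up with curl, as in the manual check.
check_exit_ip() {
  expected=$1
  actual=${2:-$(curl -s --max-time 10 ifconfig.me)}
  if [ "$actual" = "$expected" ]; then
    echo "ok: exiting via $actual"
  else
    echo "mismatch: expected $expected, got ${actual:-nothing}" >&2
    return 1
  fi
}

# Hypothetical usage with the client connected:
#   check_exit_ip 203.0.113.10
```

A non-zero exit status makes the check usable in a script, for example before starting anything that should never run over the local connection.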

More importantly, the flow feels invisible. The TLS handshake looks like Chrome talking to a legitimate site, so DPI has little reason to poke at it. If I ever need to rotate the shortId or regenerate keys, the pipeline does the heavy lifting and I just paste a fresh VLESS link into my clients.

Monitoring & logging (so I'm not flying blind)

I wanted enough observability to debug issues, without accidentally building a surveillance system for myself.

- VPS logs go to Cloud Logging, then sink into BigQuery

- I only capture error-level Xray logs (no noisy access logs)

- BigQuery tables expire after 7 days to control cost

- Host metrics (CPU/mem) are collected periodically

Estimated logging cost is basically nothing (under €0.10/month).

Read the Deep Dive

If you want the actual Terraform layout, Cloud Build triggers, fleet inventory, and the Xray templates/scripts, it's all documented in the repo, including the bootstrap steps so I can re-import resources cleanly next time I need to stand it up.

https://github.com/scottsantinho/vless-reality-gcp